Agentic SaaS Playbook 2026

1. Executive Summary

A practical mental model for building agentic products end-to-end

If you're looking for a specific playbook, additional research, or hands-on support building your product, you can write me at agentnativedev (at) gmail (dot) com.

In this guide, I'll use planes as a practical way to reason about agentic products end-to-end: UX, orchestration, runtime, memory, data, integrations, security, and observability.

You can think of each plane as a lens: a place where specific failure modes show up and where specific investments pay off.

It's also worth distinguishing architecture styles from patterns.

A style is a named bundle of defaults, i.e. how components are arranged, communicate, and deploy, and what trade-offs you inherit. A pattern is a contextual solution to a recurring problem.

In agentic systems you'll use many patterns, but you'll still end up living inside a handful of styles and their constraints.

2. Architectural Planes of Agentic SaaS

The planes below are a way to keep structure legible. Each plane is where different constraints dominate and different “build vs buy” decisions make sense.

- UX: user interaction, trust, adoption, “articulation burden”

- Control: deterministic execution substrate (routing, state, tool contracts, gates)

- Runtime: latency envelope, isolation boundary, retries, cost model

- Memory: knowledge, episodic, and profile memory; caching; provenance

- Data: external data sources and tool integration surfaces

- Security: governance, tool firewall, policy-as-code, isolation

- Observability: tracing, evaluation, monitoring, debugging, cost attribution


User Experience Plane (UI/UX)

Adoption, cost control, and credibility live here

Most agentic products fail because the experience makes the smart thing hard to access, hard to trust, or hard to justify. In practice, UX breakdowns compound quickly.

Small friction points across onboarding, mental models, guardrails, and handoffs add up to a slow-motion collapse.

That's why the UI/UX plane is your strategy for adoption, cost control, and credibility.

A good rule of thumb for 2026: start with the user, not the algorithm. Before you choose model stacks or “agent frameworks,” look at how people do the job today:

- What are they trying to accomplish?

- What feels slow, risky, or annoying?

  - Slow: too many steps

  - Risky: easy to make mistakes

  - Annoying: context switching

- Where do they already live (Slack, email, CRM, ticketing systems)?

- What is the smallest change that helps, even if that change is non-AI?

Sometimes that answer is “no AI required.”

And even when AI is involved, the product should be sold and understood in terms of user outcomes, not “we used LLM X.”

Borrowed surfaces beat bespoke UIs early

In the earliest stages, “frontend tax” is real. Building bespoke UIs can delay the launch by months.

So teams often meet users where they already work: Slack, Microsoft Teams, email, or the system-of-record (e.g., an ITSM tool, CRM, or ticketing platform). You get instant distribution, familiar interaction patterns, and you can iterate quickly.

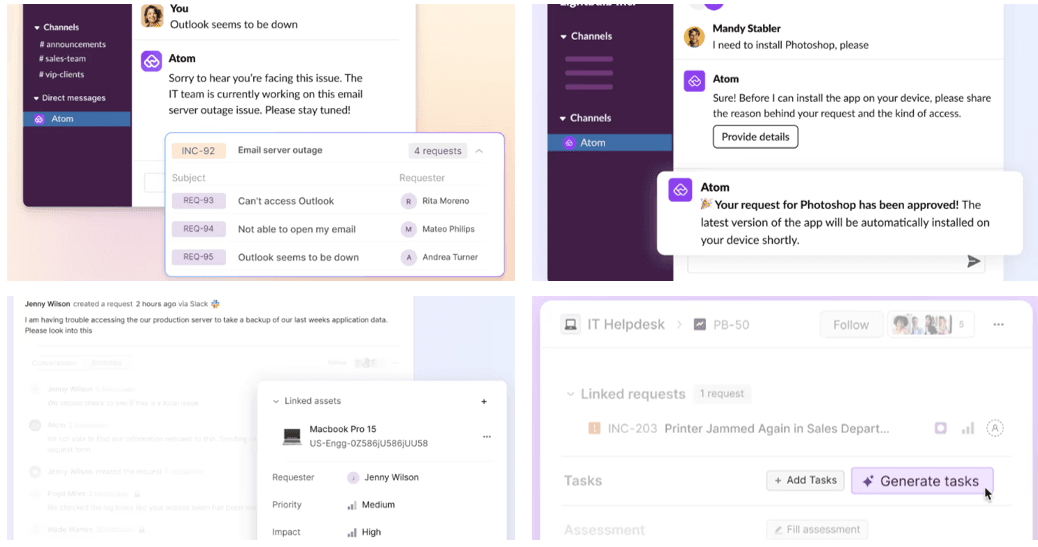

A good example is Atomicwork's agentic service-management platform, which launched with Slack and Microsoft Teams integrations. Employees interact through Slack while the service-management layer and agentic workflows run behind the scenes.

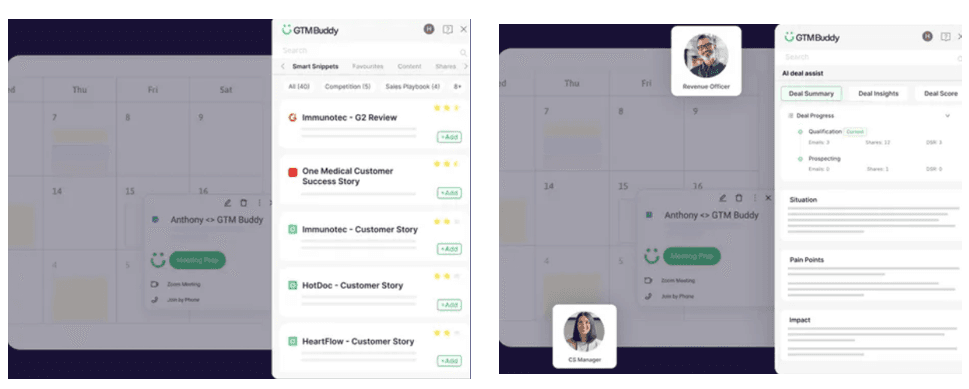

Another example is GTM Buddy: its agents meet end users where they work by embedding into Salesforce, Gmail, Outlook, Slack, and Teams, so users don't have to toggle between tools.

Source: GTM Buddy

This pattern is especially sensible if you're racing toward a funding milestone: you can prove value without building a UI fortress first.

Chat is an amazing entry point but a weak information architecture. Users must (1) know what the system can do and (2) express it well. That “articulation burden” is where ROI quietly dies.

People either don't ask, ask the wrong thing, or don't trust what they get back.

Borrowed surfaces also come with a hidden invoice:

- You inherit the platform’s interaction model, which is great for quick intake but weak for complex work.

- You inherit the platform’s constraints: data retention rules, UI limitations, and API policy changes.

- You risk becoming “a bot” instead of “a product.” Users don’t build trust in bots the way they build trust in tools.

Here are some design moves that can reduce early-stage failure without building a full UI:

- Assist + handoff: don't try to “replace experts.” Draft the response, propose next steps, show reasoning, ask for approval.

- Make cost visible: add friction where it matters (confirmations, approvals, scoped actions) and remove it where it doesn't (quick replies).

- Micro-interactions: buttons, forms, menus, and “suggested next steps” become lightweight IA inside chat for repeatable tasks.

A mature pattern is hybrid: you keep Slack/Teams as the fast “first touchpoint” and provide a dedicated web/mobile experience as the trustworthy “control center” for governance, persistence, multi-step workflows, and differentiation.

Beyond bots: dashboards, workflows, and trust for growth

Chat can handle intake, but operations require structure.

Support workflows are the clearest example. Zendesk's Slack integration, for instance, lets teams create tickets inside Slack via shortcuts and actions, but resolution still lives inside the structured system behind it.

This is why, as startups grow and move into Series B, they often develop dedicated agent interfaces. The bottleneck shifts from “can we ship?” to “can users reliably get value every day?”

Once you're there, richer patterns become worth the effort:

- Multi-step workflows with checkpoints

- Persistent histories and task states

- Approvals, audit trails, and governance

- Personalization and role-based views

- Multi-agent coordination (handoffs between agents and humans)

Relevance AI is one example of the “agent OS” direction: dedicated surfaces plus integrations, positioning agents as managed workforce components. The team raised a US$24M Series B round in late 2024.

Source: Relevance AI

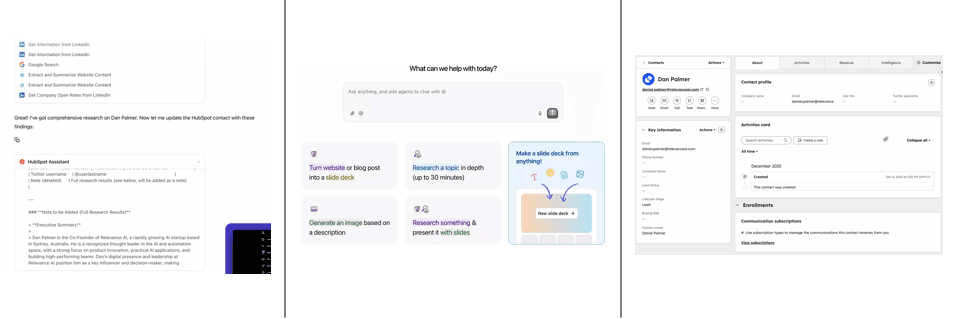

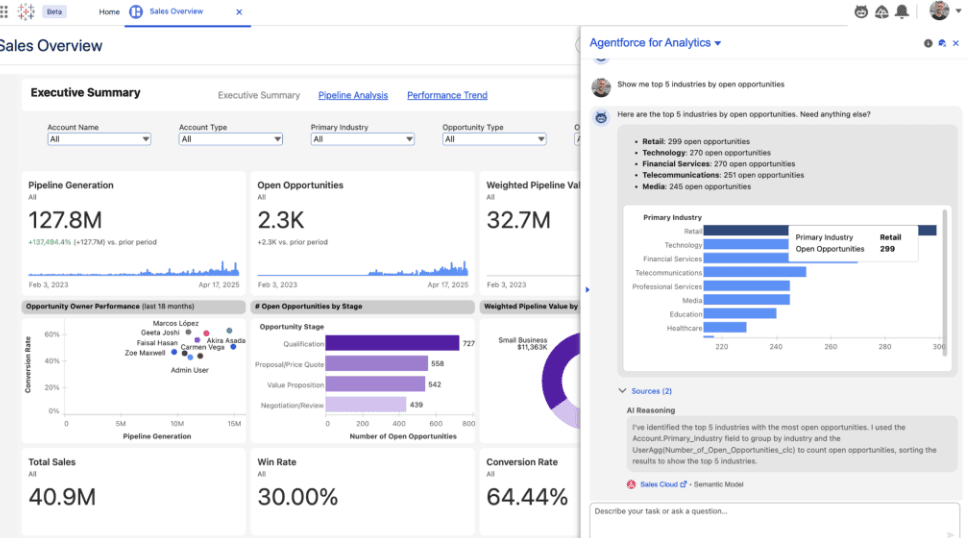

You'll also see more predictive onboarding, chat-based dashboards, and voice assistants in areas such as fintech and healthcare. You can go well beyond simple Slack bots and build dashboards that display agent state, analytics, and contextually appropriate actions.

Source: Tableau

- Persistence

- Governance

- Differentiation

- Mental model users learn

Two UX practices that pay off

- Storyboard first: before you pixel-push, sketch the flow (who's the protagonist, what triggers the interaction, what “success” looks like, and where the agent must not operate autonomously). It's the fastest way to catch “wrong use case” problems early.

- Agent narrative: design the story users experience, i.e. what the agent can do, what it's doing now, what it needs from them, and what happens next. Without a narrative, even a capable agent feels random.

Hybrid wins at Series-C and enterprise

By Series-C (and certainly in enterprise), the winning pattern is almost always hybrid:

- Keep: Slack/Teams for speed, reach, and convenience

- Add: a dedicated web/mobile experience for depth, governance, and differentiation

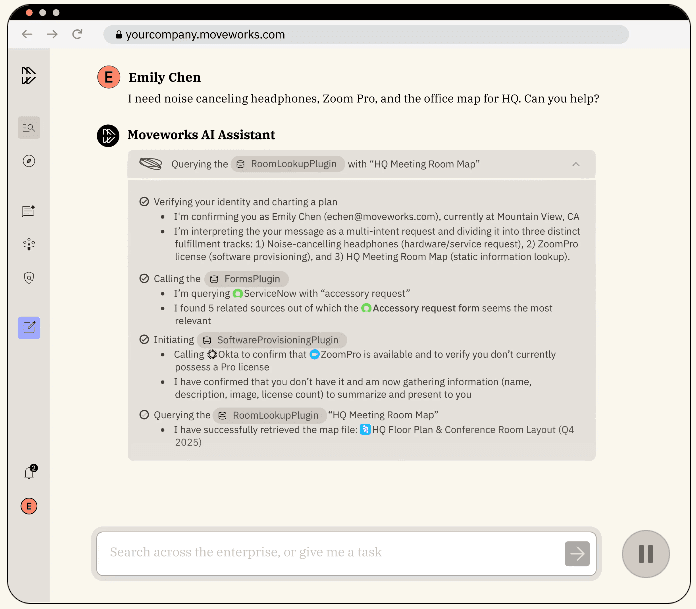

Moveworks is a well-known example of an agentic IT support surface that works through enterprise messaging tools like Microsoft Teams and Slack for convenience, while still supporting richer, structured experiences for ticketing, workflows, and analytics.

Source: Moveworks

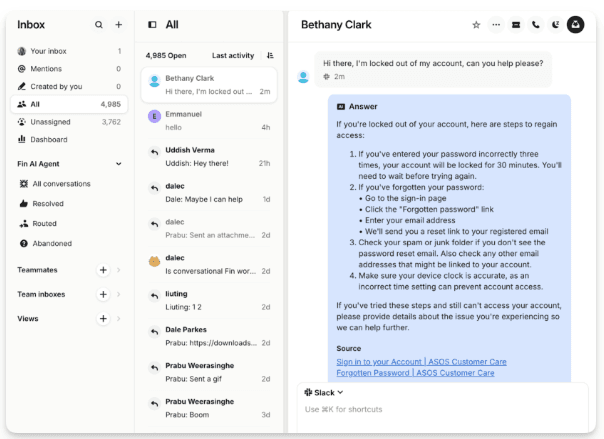

Established SaaS products like Intercom show the same maturity curve. Intercom's support system, powered by its Fin AI agent, connects to Slack channels so that support agents see tickets in both Intercom and Slack, with real-time sync of statuses and conversation histories.

What changes in UX emphasis (late stage)

- Expectations: users need a simple, explicit contract, i.e. what the agent can do, what it can't, and what it will ask before acting.

- Transparency: show sources, assumptions, and the “because” behind recommendations to earn trust.

- Error handling: safe retries, graceful fallbacks, clear escalation, and “undo” matter more than cleverness.

- Autonomy: start with suggestions, then move to guarded execution, then expand autonomy as trust and reliability prove out.

- Data restraint: collect less by default, ask permission when you must, and be explicit about retention and access. Users notice.

- Start with borrowed surfaces (Slack/Teams/CRM) to validate value quickly.

- Add lightweight IA inside chat (structured actions, suggested flows, guardrails) to reduce articulation burden and misuse.

- Build a dedicated agent hub for persistence, multi-step workflows, governance, and brand differentiation.

- Land on a hybrid model where chat is the fast “front door,” and your UI is the trustworthy “control center.”

If you're building agentic SaaS, the key is not picking “Slack bot vs bespoke UI” as a permanent identity. It's recognizing where you are on the maturity spectrum, then designing the smallest UX system that makes:

- Value discoverable

- Outcomes trustworthy

- Human cost aligned with business benefit

Developer experience is part of UI/UX

If you're building an agentic platform (not just an app) where developers consume your services, your “UI plane” includes developer-facing surfaces too:

- SDKs

- CLIs

- Self-service panels

- Workflows

- Admin controls

- Diagnostics

- Rollout tooling

- Support

You often optimize for four developer outcomes: time-to-first-success (onboarding), time-to-confidence (predictability), time-to-debug (observability), and time-to-recover (safe change + rollback). The companies below win because they compress those timelines aggressively.

DX patterns that correlate with adoption

- Integration contract: make the platform's behavior legible (inputs/outputs, tool scopes, permissioning, rate limits, cost signals, and failure modes). Developers ship faster when the “contract” is clear enough to reason about and test.

- Traceability: give developers run traces they can debug, covering tool calls, retrieved context, state transitions, approvals, retries, and where the agent asked for clarification. If developers can't explain an outcome, they won't trust it in production.

- Safe sandboxes: provide environments where teams can test with real-ish data without real-world blast radius, e.g. replay, simulation, dry-run modes, and one-click rollback. The goal is “learn fast” without “break prod.”

- Opinionated primitives: ship reusable UI + API building blocks for “agent status,” approvals, citations, human handoff, feedback, and undo, so every team doesn't reinvent unsafe patterns with slightly different failure modes.

These patterns reduce the cognitive load of shipping agentic behavior. The goal is to make the “right” path the easiest path, and make risky moves feel obviously risky before they hit production.

Let's have a look at a few examples.

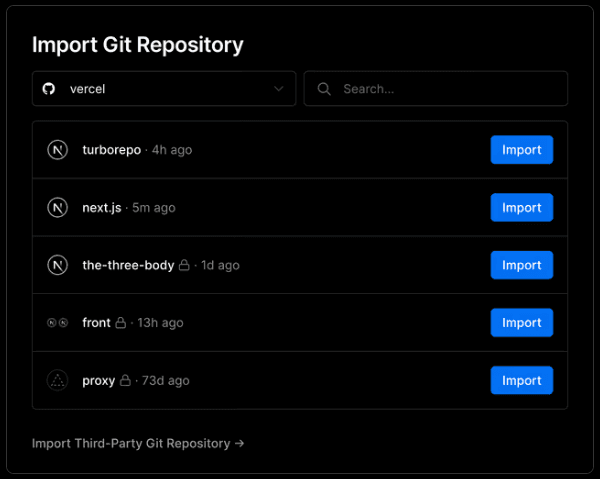

Vercel turned deployments into a default workflow. Every branch gets a preview environment. That's a DX pattern agent platforms should steal. Make “safe testing” the path of least resistance, and tie it to the habits developers already have (Git → environment → feedback → merge).

Source: Vercel Git integrations

This removes the hidden tax of “setting up a place to test.” Preview environments compress feedback loops and eliminate coordination overhead (no shared-staging fights, fewer “works on my machine” debates). They also boost developer happiness because progress becomes visible. A shareable URL is instant social proof, and it aligns engineering with product/design review without extra ceremony.

In agentic platforms, previews matter even more because behavior is probabilistic. If every iteration requires a full production-like release, developers become conservative and slow. Previews let teams explore safely where they can tune prompts, adjust tool scopes, refine guardrails, and do it with realistic integration context.

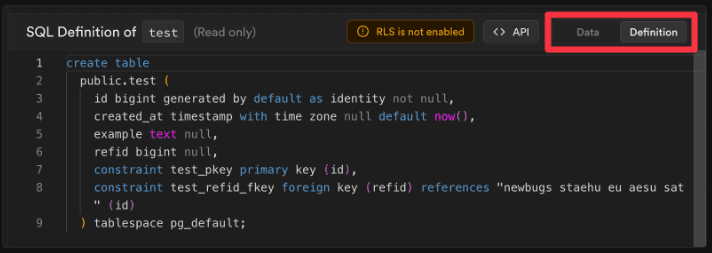

Supabase treats Postgres as the contract: local dev workflows and migrations keep behavior consistent across environments, and generated types make mismatches show up early. You can similarly treat “what's allowed” as a first-class artifact.

Source: Supabase Local development

This effectively reduces onboarding friction. When the “contract” is your schema, the platform becomes teachable: new developers can infer behavior from types, tables, and migrations instead of reading Slack threads.

For agents, the schema analogy extends beyond data. You want “behavior schemas” too: tool input/output definitions, allowed action scopes, escalation rules, and approval boundaries. The more of that you can represent as structured artifacts (and validate in CI), the less your platform depends on hero engineers.
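As a minimal sketch of a behavior schema validated in CI, assuming a Python codebase: `ToolSpec`, `validate_call`, and the `issue_refund` contract are hypothetical names for illustration, not any framework's API. The idea is that tool contracts live as data, so a CI step can check recorded or fixture calls against them and fail the build on drift.

```python
from dataclasses import dataclass


@dataclass
class ToolSpec:
    """Hypothetical behavior schema for one tool (illustrative, not a specific framework's format)."""
    name: str
    input_fields: dict               # required input field name -> expected Python type
    allowed_scopes: frozenset        # scopes this tool may run under
    requires_approval: bool = False  # whether a human must approve before execution


def validate_call(spec: ToolSpec, scope: str, args: dict) -> list:
    """Return contract violations for one proposed call; an empty list means it conforms."""
    errors = []
    if scope not in spec.allowed_scopes:
        errors.append(f"scope '{scope}' not allowed for {spec.name}")
    for field_name, expected_type in spec.input_fields.items():
        if field_name not in args:
            errors.append(f"missing field '{field_name}'")
        elif not isinstance(args[field_name], expected_type):
            errors.append(f"field '{field_name}' must be {expected_type.__name__}")
    for extra in sorted(set(args) - set(spec.input_fields)):
        errors.append(f"unexpected field '{extra}'")
    return errors


# Example contract: refunds need a write scope and human approval.
REFUND = ToolSpec(
    name="issue_refund",
    input_fields={"ticket_id": str, "amount_cents": int},
    allowed_scopes=frozenset({"billing:write"}),
    requires_approval=True,
)

# In CI, run recorded or fixture calls through the validator and fail the build on any violation.
fixtures = [("billing:write", {"ticket_id": "T-1", "amount_cents": 500})]
violations = [e for scope, args in fixtures for e in validate_call(REFUND, scope, args)]
if violations:
    raise SystemExit("behavior contract violations: " + "; ".join(violations))
```

Returning a list of violations (instead of raising on the first one) keeps CI output useful: one run reports every broken field and scope at once.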

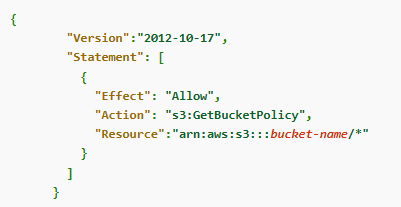

AWS ships an IAM policy simulator so teams can validate and troubleshoot authorization rules before rolling changes out.

Source: AWS IAM policy simulator

Permissioning is where agentic platforms either become enterprise-grade or become a toy. Developers don't fear complexity, they fear invisible complexity. Testable permissions reduce anxiety because teams can answer “who can do what, under which conditions” without guessing.
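A testable permission layer can start small. The sketch below is a simplified, hypothetical rule evaluator loosely modeled on the deny-overrides-allow, default-deny semantics of IAM-style systems; it is not AWS's actual evaluation logic. `Rule`, `simulate`, and the rule patterns are illustrative. The useful property is that `simulate` returns both a decision and the reason, so teams can dry-run “who can do what, under which conditions” before shipping a policy change.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class Rule:
    effect: str                 # "allow" or "deny"
    principal: str              # glob over principals, e.g. "agent:support-*"
    action: str                 # glob over actions, e.g. "tickets:*"
    conditions: dict = field(default_factory=dict)  # context keys that must match exactly


def simulate(rules, principal, action, context):
    """Dry-run an authorization decision and explain it.

    Simplified evaluation order: an explicit deny always wins,
    otherwise any matching allow grants access, otherwise the default is deny.
    """
    allowed_by = None
    for i, rule in enumerate(rules):
        if not (fnmatchcase(principal, rule.principal) and fnmatchcase(action, rule.action)):
            continue
        if not all(context.get(k) == v for k, v in rule.conditions.items()):
            continue
        if rule.effect == "deny":
            return "deny", f"explicit deny by rule {i}"
        if allowed_by is None:
            allowed_by = i
    if allowed_by is not None:
        return "allow", f"allowed by rule {allowed_by}"
    return "deny", "implicit deny: no matching allow"


RULES = [
    Rule("allow", "agent:support-*", "tickets:*"),
    Rule("deny", "agent:*", "tickets:delete"),
    Rule("allow", "agent:support-*", "refunds:create", {"env": "sandbox"}),
]

for act, ctx in [("tickets:update", {}), ("tickets:delete", {}), ("refunds:create", {"env": "prod"})]:
    print(act, ctx, "->", simulate(RULES, "agent:support-bot", act, ctx))
```

The same `RULES` list can back both the runtime check and a CI test suite of expected decisions, which is what makes permission changes reviewable.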

This also sets up your traceability story. Once you have a clear contract and enforceable boundaries, the next productivity bottleneck is debugging: when something goes wrong, can a developer see exactly where the contract was violated (or where the world didn't match expectations)?

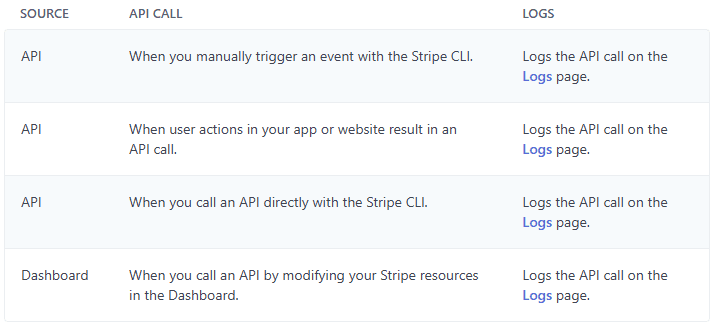

Stripe makes request logs a first-class developer surface. You can inspect what was sent, what was returned, and what failed. That's also how you should treat run traces.

Source: Stripe Request logs

Logs reduce time-to-debug. More importantly, they reduce onboarding time because they teach developers how the platform behaves in the real world rather than on an idealized “happy path.” In agent platforms, logs should show not just errors but also intent, e.g. tool calls attempted, inputs used, scopes applied, and which guardrail blocked an action.
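One way to make intent visible is to log every attempted tool call as a structured record rather than a free-text line. The field names below (`intent`, `scopes_applied`, `blocked_by`) are assumptions for illustration, and `print` stands in for whatever log pipeline you use.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class ToolCallLog:
    """Hypothetical structured log record for one attempted tool call."""
    run_id: str
    tool: str
    intent: str                          # why the agent tried this call
    inputs: dict
    scopes_applied: list = field(default_factory=list)
    outcome: str = "ok"                  # "ok", "error", or "blocked"
    blocked_by: Optional[str] = None     # guardrail name when outcome == "blocked"
    error: Optional[str] = None


def emit(record: ToolCallLog) -> str:
    """Serialize to one JSON line; print() stands in for a real log pipeline."""
    line = json.dumps(asdict(record), sort_keys=True)
    print(line)
    return line


line = emit(ToolCallLog(
    run_id="run_42",
    tool="issue_refund",
    intent="customer reported a duplicate charge",
    inputs={"ticket_id": "T-1", "amount_cents": 500},
    scopes_applied=["support:read"],
    outcome="blocked",
    blocked_by="refund_requires_billing_scope",
))
```

Because blocked calls are logged with the same shape as successful ones, a developer can filter on `outcome == "blocked"` and see exactly which guardrail fired and with what inputs.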

Still, logs alone often answer “what happened,” not “why did it happen.” That's where traces and causal timelines become the difference between a confident developer and a frustrated one.

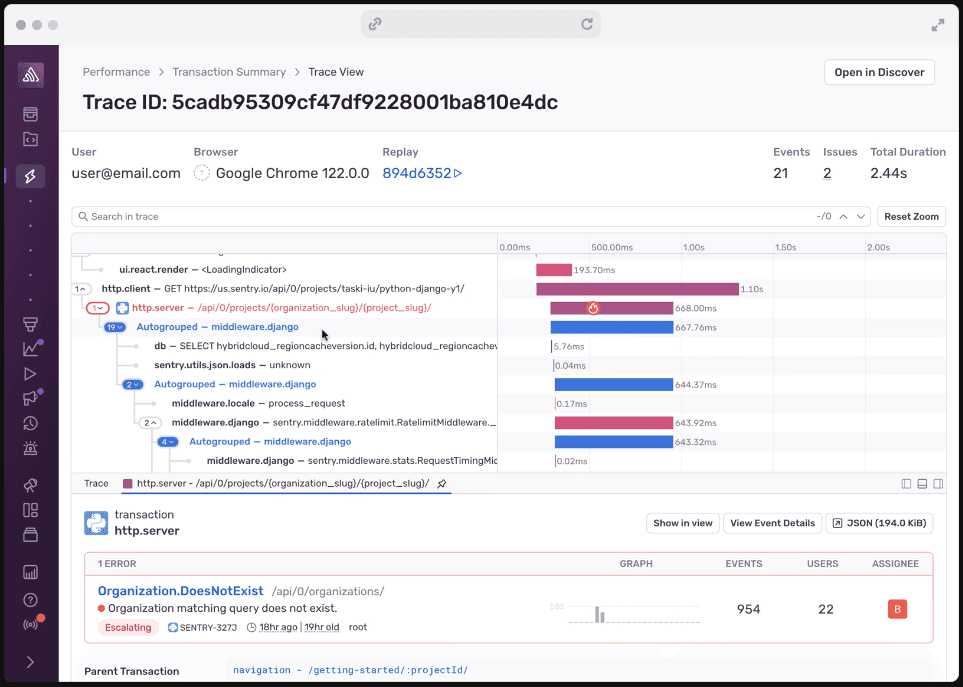

Sentry's Trace View and breadcrumbs are designed to answer the only question that matters during incidents: “what happened, in what order, and why?” You need the same ergonomics, e.g. timelines of tool calls, state changes, user approvals, and failures so teams can debug behavior.

Source: Sentry Trace View

This is also where developer happiness shows up as a measurable operational outcome: lower MTTR, fewer escalations, and less “psychological load” during incidents. A good trace UI turns debugging into navigation.

Developers stop asking “is the model broken?” and start answering “the tool call failed because scope X blocked it,” or “retrieval returned stale context,” or “approval step was skipped due to misconfiguration.”
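To move from “what happened” to “why,” each trace event can record which earlier event caused it. This is a hypothetical in-memory sketch (`RunTrace`, `record`, and `explain_failure` are illustrative names, not Sentry's or any tracing SDK's API): it renders an ordered timeline and walks back from the first failure through its causes.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    seq: int
    kind: str                     # e.g. "retrieval", "plan", "tool_call", "approval"
    name: str
    status: str                   # "ok", "failed", or "skipped"
    detail: str = ""
    parent: Optional[int] = None  # seq of the event that caused this one


class RunTrace:
    def __init__(self):
        self.events = []
        self._seq = itertools.count(1)

    def record(self, kind, name, status="ok", detail="", parent=None) -> int:
        seq = next(self._seq)
        self.events.append(Event(seq, kind, name, status, detail, parent))
        return seq

    def timeline(self) -> list:
        """One line per event, in the order they happened."""
        return [f"{e.seq:>2} {e.kind:<10} {e.name:<18} {e.status}" + (f" ({e.detail})" if e.detail else "")
                for e in self.events]

    def explain_failure(self) -> list:
        """Start at the first non-ok event and follow parent links back to the root cause."""
        by_seq = {e.seq: e for e in self.events}
        current = next((e for e in self.events if e.status != "ok"), None)
        chain = []
        while current is not None:
            chain.append(f"{current.kind}:{current.name} [{current.status}] {current.detail}".rstrip())
            current = by_seq.get(current.parent)
        return chain


trace = RunTrace()
r = trace.record("retrieval", "kb_search", detail="3 docs")
p = trace.record("plan", "draft_refund", parent=r)
trace.record("tool_call", "issue_refund", status="failed", detail="scope billing:write blocked", parent=p)
print("\n".join(trace.timeline()))
print(trace.explain_failure())
```

The causal chain is what lets someone say “the tool call failed because scope X blocked it” instead of guessing whether the model is broken.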

Once you can explain behavior, the next constraint becomes iteration speed. The best debugging tools in the world won't help if every fix requires a risky production deploy. That's why developer-first companies obsess over safe, realistic sandboxes.

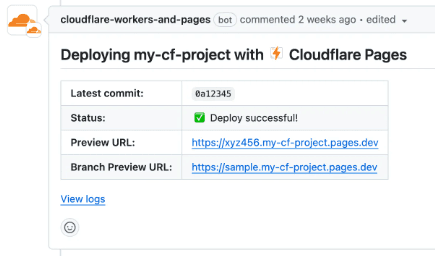

Cloudflare Workers supports preview URLs and local dev workflows so developers can iterate quickly without pushing risky changes straight to production. Give your teams the same tight feedback loop and safe promotion paths.

Source: Cloudflare Previews

Sandboxes increase velocity because they turn experimentation into a default behavior. When developers can replay inputs, simulate tool failures, and test different guardrails quickly, they converge on reliable designs faster and ship with less fear.
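Replay plus fault injection can be sketched in a few lines. Assume a hypothetical agent step `handle` that looks up an order and escalates when the tool fails; `flaky` wraps a tool so it fails on chosen inputs, and `replay` re-runs recorded inputs and reports only the cases whose outcome changed. All names here are illustrative.

```python
from typing import Callable


class ToolFailure(Exception):
    """Raised by a tool (or a simulated outage) when a call fails."""


def flaky(tool: Callable[[str], str], fail_on: set) -> Callable[[str], str]:
    """Wrap a tool so it raises ToolFailure for chosen inputs (simulated outage)."""
    def wrapped(arg: str) -> str:
        if arg in fail_on:
            raise ToolFailure(f"simulated failure for {arg!r}")
        return tool(arg)
    return wrapped


def handle(ticket: str, lookup: Callable[[str], str]) -> str:
    """Hypothetical agent step: answer from the order tool, escalate if the tool fails."""
    try:
        status = lookup(ticket)
    except ToolFailure:
        return "escalate_to_human"
    return f"reply: order is {status}"


def replay(cases, lookup):
    """Re-run recorded (input, expected_output) pairs; return those whose outcome differs."""
    diffs = []
    for ticket, expected in cases:
        got = handle(ticket, lookup)
        if got != expected:
            diffs.append((ticket, expected, got))
    return diffs


orders = {"T-1": "shipped", "T-2": "pending"}
recorded = [("T-1", "reply: order is shipped"), ("T-2", "reply: order is pending")]

print(replay(recorded, orders.__getitem__))                  # happy path matches the recording
print(replay(recorded, flaky(orders.__getitem__, {"T-2"})))  # outage on T-2 surfaces as a diff
```

In the second run the diff is the designed fallback (escalation), so it confirms the guardrail works; an unexpected diff is the signal to investigate.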

But even with great previews, eventually you have to ship to production. That's where rollout UX matters: you need confidence-building mechanisms that let teams deploy agent behavior changes without betting the company on a single release.

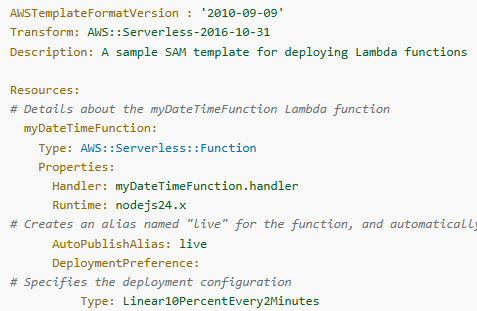

AWS Lambda weighted aliases allow gradual traffic shifting to new versions with quick rollback. This is the rollout UX agent platforms should standardize: promote behavior changes through controlled exposure, not big-bang releases.

Source: AWS Lambda alias routing

Canary rollouts reduce change failure rate and that translates directly into developer trust. Teams become willing to ship improvements because the blast radius is explicit and controllable. In agent platforms, this is especially important because behavior changes can alter cost, latency, and user trust in one move. Controlled exposure makes those tradeoffs observable before they become widespread.
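A canary with automatic rollback comes down to two decisions: where each request goes, and when to stop sending traffic to the candidate. This sketch is loosely inspired by weighted alias routing but is not the Lambda API; `CanaryAlias`, the 5% error budget, and the `min_samples` threshold are hypothetical.

```python
import random


class CanaryAlias:
    """Send a fraction of traffic to a candidate version and roll back on a high error rate.

    Loosely inspired by weighted aliases; names, thresholds, and the rollback rule are hypothetical.
    """

    def __init__(self, stable, candidate, weight, max_error_rate, min_samples=50, rng=None):
        self.stable = stable
        self.candidate = candidate
        self.weight = weight                  # fraction of requests routed to the candidate
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples        # don't judge the candidate on a handful of calls
        self.rng = rng or random.Random()
        self.calls = 0
        self.errors = 0
        self.rolled_back = False

    def route(self):
        return self.candidate if self.rng.random() < self.weight else self.stable

    def report(self, version, ok):
        """Record an outcome; shift all traffic back to stable if the candidate misbehaves."""
        if version != self.candidate or self.rolled_back:
            return
        self.calls += 1
        self.errors += 0 if ok else 1
        if self.calls >= self.min_samples and self.errors / self.calls > self.max_error_rate:
            self.weight = 0.0
            self.rolled_back = True


# Simulate a candidate planner that fails 30% of the time against a 5% error budget.
rng = random.Random(7)
alias = CanaryAlias("planner-v1", "planner-v2", weight=0.1, max_error_rate=0.05, rng=rng)
for _ in range(5_000):
    version = alias.route()
    ok = version == "planner-v1" or rng.random() > 0.30
    alias.report(version, ok)
print("rolled back:", alias.rolled_back, "candidate calls:", alias.calls)
```

The blast radius is explicit in two numbers: `weight` bounds how many requests the candidate can affect, and `min_samples` bounds how long a bad version can run before rollback.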

Still, version-based rollout is only half the story. The other half is feature-level control: the ability to turn behaviors on and off, segment users, and iterate safely without coupling every tweak to a deployment artifact.

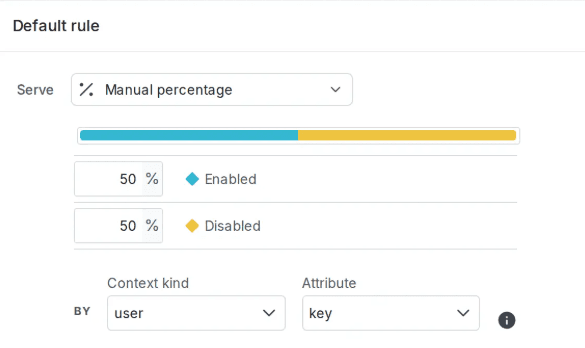

LaunchDarkly treats percentage rollouts and staged releases as normal operating procedure. For agent systems, progressive delivery is how you prevent a new planner from doubling your escalations overnight.

Source: LaunchDarkly Percentage rollouts

When feature-level controls exist, teams can test hypotheses (“does this guardrail reduce escalation?”). They also improve developer happiness because the platform supports reversible decisions: you can experiment without fear of being trapped by a release.
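Percentage rollouts typically rely on stable bucketing so that a given user keeps seeing the same behavior across sessions. The sketch below hashes the flag key and user id into one of 10,000 buckets; this is a generic technique, not LaunchDarkly's actual algorithm, and the flag dictionary shape (`percentage`, `segments`, `exclude_users`) is assumed for illustration.

```python
import hashlib


def bucket(flag_key: str, user_id: str) -> int:
    """Map (flag, user) to a stable bucket in [0, 10000) so a user keeps the same variant."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def is_enabled(flag: dict, user: dict) -> bool:
    """Hypothetical flag evaluation: explicit opt-outs, then segment targeting, then percentage."""
    if user["id"] in flag.get("exclude_users", ()):
        return False
    segments = flag.get("segments")
    if segments and user.get("segment") not in segments:
        return False
    return bucket(flag["key"], user["id"]) < flag["percentage"] * 100


new_planner = {"key": "planner-v2", "percentage": 20, "segments": {"beta"}}
users = [{"id": f"u{i}", "segment": "beta"} for i in range(10_000)]
share = sum(is_enabled(new_planner, u) for u in users) / len(users)
print(f"{share:.1%} of beta users get the new planner")
```

Including the flag key in the hash means different flags bucket users independently, so the same 20% of users are not always the guinea pigs for every experiment.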

The missing piece for many agent platforms is deterministic testing. Progressive rollouts help in production, but you still want a way to validate integration flows repeatedly without triggering real-world side effects.

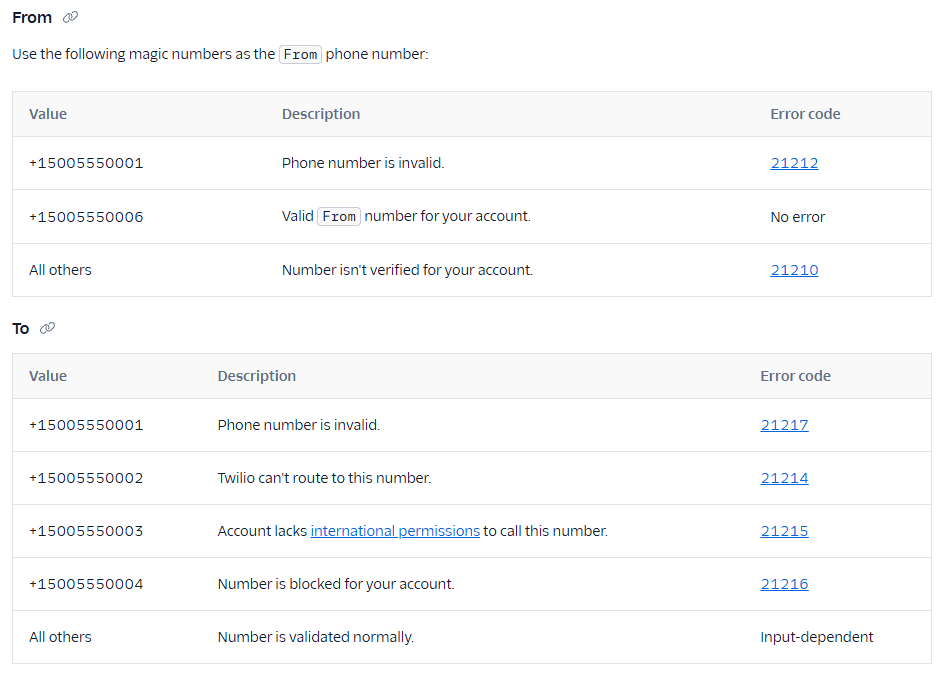

Twilio's test credentials let teams exercise integration flows without triggering real-world side effects. That's the sandbox pattern agent platforms should copy, i.e. preserve the shape of production behavior while making consequences safe and repeatable.

Source: Twilio Test credentials

Determinism reduces onboarding friction because it makes learning reproducible: new developers can run the same scenario and see the same outcomes. It also reduces operational risk because teams can build strong regression tests around “known bad” cases, which is exactly what agent platforms need when behavior depends on tool availability, permissions, or shifting context.
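The test-credentials pattern translates to an adapter that keeps the real client's interface and result shape but has no side effects. In this sketch, `SandboxMessageClient` and its “recipients ending in 0000 fail” rule are hypothetical (Twilio documents its own test values); the point is that failures become deterministic and therefore testable.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class SendResult:
    id: str
    status: str                      # "queued" or "failed"
    error_code: Optional[int] = None


class MessageClient(Protocol):
    def send(self, to: str, body: str) -> SendResult: ...


class SandboxMessageClient:
    """Same interface and result shape as a real client, but no messages leave the process.

    Hypothetical magic-input rule: recipients ending in "0000" fail deterministically.
    """

    def __init__(self):
        self.sent = []  # captured calls, useful for assertions in regression tests

    def send(self, to: str, body: str) -> SendResult:
        self.sent.append((to, body))
        msg_id = f"test_{len(self.sent):04d}"
        if to.endswith("0000"):
            return SendResult(msg_id, "failed", error_code=400)
        return SendResult(msg_id, "queued")


def notify(client: MessageClient, to: str) -> str:
    """Agent step under test: text the customer, fall back to email if sending fails."""
    result = client.send(to, "Your ticket was updated")
    if result.status == "queued":
        return "notified"
    return f"fallback_email (code {result.error_code})"


client = SandboxMessageClient()
print(notify(client, "+15550001234"), "|", notify(client, "+15550000000"))
```

Because `notify` only depends on the `MessageClient` shape, the same agent code runs against the sandbox in tests and the real client in production.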

Once you can test safely, you can go further and make “preview before action” a platform primitive. This is how you prevent costly or irreversible automation mistakes, and it's a huge trust builder for both developers and operators.

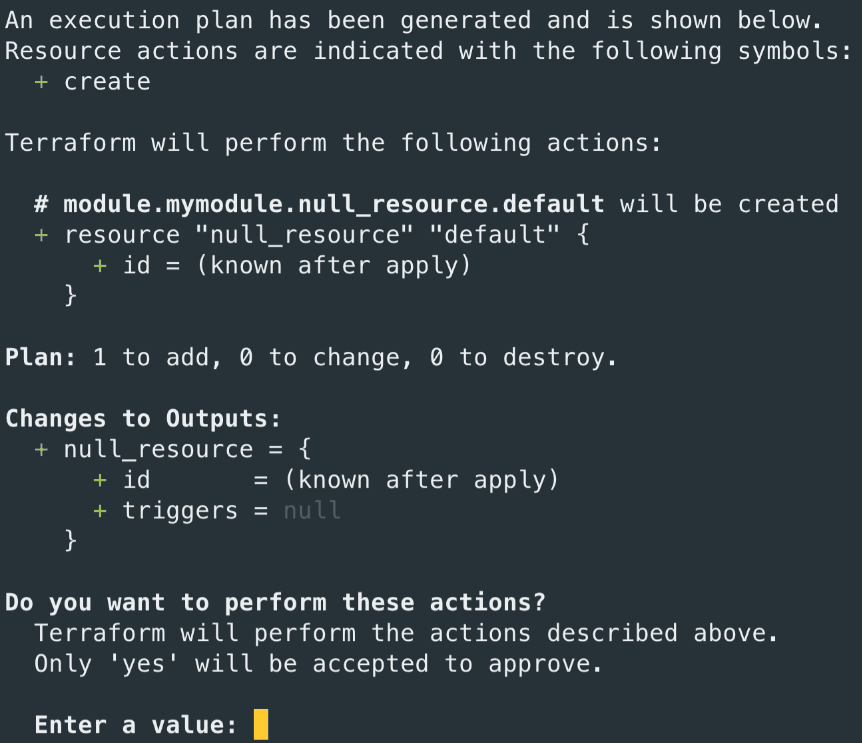

Terraform's plan makes “show me the diff before you apply it” the standard workflow. For agent platforms, this maps to previews of actions: what will change, what tools will run, what data will be touched, and what the rollback path is.

Source: Terraform plan

Previews increase developer confidence because they turn execution into an informed decision. In agent systems, “plan mode” is how you make autonomy legible. You can show the proposed tool calls, what data will be written, what permissions are required, and which steps require approval. This reduces incident volume and makes it easier for developers to defend the platform internally.
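A plan mode can be modeled as a data structure that is rendered for review before anything runs. In this hypothetical sketch, `Plan.render` produces the preview, and `apply` refuses to execute if any required scope is missing or if write steps lack human approval. The names and the `~` marker are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Action:
    tool: str
    target: str
    writes: bool            # does this step change external state?
    required_scope: str
    rollback: str = "none"  # how to undo the step, if possible


@dataclass
class Plan:
    actions: list

    def render(self) -> str:
        """Human-readable preview; '~' marks steps that write, '.' marks read-only steps."""
        return "\n".join(
            f"{'~' if a.writes else '.'} {a.tool} -> {a.target} "
            f"[scope={a.required_scope}, rollback={a.rollback}]"
            for a in self.actions
        )

    def missing_scopes(self, granted: set) -> list:
        return sorted({a.required_scope for a in self.actions} - granted)

    def needs_approval(self) -> list:
        return [a for a in self.actions if a.writes]


def apply(plan: Plan, granted: set, approved: bool, execute) -> list:
    """Execute only if every scope is granted and, when anything writes, a human approved."""
    missing = plan.missing_scopes(granted)
    if missing:
        raise PermissionError(f"missing scopes: {missing}")
    if plan.needs_approval() and not approved:
        raise PermissionError(f"{len(plan.needs_approval())} write step(s) need approval")
    return [execute(a) for a in plan.actions]


plan = Plan([
    Action("crm.lookup", "account/123", writes=False, required_scope="crm:read"),
    Action("crm.update", "account/123.tier", writes=True, required_scope="crm:write",
           rollback="restore previous tier"),
])
print(plan.render())
```

Keeping scope checks separate from approval matters: a human approving the plan should not be able to grant a permission the agent was never given.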

Now zoom out and notice that all of the above assumes developers can get started quickly. But many platforms lose adoption before they even reach debugging or rollout, because initial setup feels like homework. Developer-first companies treat onboarding as a first-class performance problem.

This is velocity through momentum. When teams can go from “new project” to “working demo” quickly, they develop attachment to the platform. Fast onboarding increases developer happiness because it respects their time, and it increases adoption because stakeholders see results early. For agent platforms, a prebuilt scaffold might include tracing enabled, safe sandboxes configured, starter guardrails, and a “hello world” tool call that demonstrates approvals + undo.

Finally, none of this matters if platform evolution is painful. Developers stick with platforms that let them upgrade without dread and that requires versioning discipline. You want change to feel intentional, observable, and reversible.

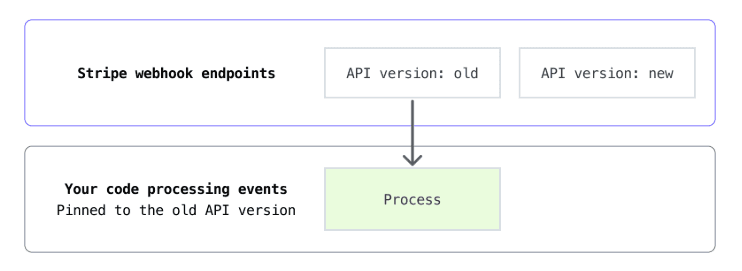

Stripe's webhook versioning guidance is a model for platform evolution without chaos. Agent platforms need the same discipline, i.e. behavior changes should be versioned, observable, and migratable so adopters move on their schedule, not yours.

Source: Stripe Webhook versioning

Developer trust is earned through predictable change. When platform behavior is versioned and observable, upgrades stop being high-stakes events and become routine maintenance. That's how you preserve velocity over time, and it's a major driver of developer satisfaction: fewer surprises, less firefighting, and more forward progress.
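Version pinning is one concrete way to let adopters move on their own schedule: each tenant stays on the behavior version it started with until it explicitly upgrades. The registry, the date-style version labels, and `VersionPins` below are hypothetical, in the spirit of dated API versions rather than a copy of Stripe's mechanism.

```python
# Hypothetical registry of agent behavior versions, labeled by release date.
# ISO-format date strings sort correctly as plain strings.
BEHAVIORS = {
    "2025-01-15": {"planner": "v1", "max_tool_calls": 5},
    "2025-06-01": {"planner": "v2", "max_tool_calls": 8},
}


class VersionPins:
    """Each tenant is pinned to a behavior version; upgrades happen only when they opt in."""

    def __init__(self):
        self.pins = {}

    def behavior_for(self, tenant: str) -> dict:
        # New tenants start on the latest version; existing pins never move implicitly.
        version = self.pins.setdefault(tenant, max(BEHAVIORS))
        return {"version": version, **BEHAVIORS[version]}

    def upgrade(self, tenant: str, version: str) -> None:
        if version not in BEHAVIORS:
            raise ValueError(f"unknown behavior version: {version}")
        self.pins[tenant] = version


pins = VersionPins()
pins.pins["acme"] = "2025-01-15"  # an early adopter, pinned before v2 shipped
print(pins.behavior_for("acme"), pins.behavior_for("newco"))
```

Shipping a new entry in `BEHAVIORS` changes nothing for existing tenants; the observable, reversible step is the explicit `upgrade` call.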

DX maturity curve (how platforms usually evolve)

- Start with a minimal contract (SDK + clear limits + observability) so early adopters can ship without guessing.

- Add first-class traces and safe sandboxes so debugging and iteration become routine, not heroic.

- Introduce progressive delivery (canaries, rollbacks, versioning) so behavior changes don't become support incidents.

- Standardize primitives (approvals, citations, handoff, undo) so every integration doesn't reinvent unsafe UX.

The point isn't to copy these companies verbatim. It's to copy their underlying move. Treat developer workflows as product UX. When developers can predict, test, observe, and recover from agent behavior changes, they ship the platform and they keep it shipped.

This should give you enough perspective on approaching UI/UX for your own solution, platform, or product. In the next section, we will dive deep into the control plane.